Create a Cloud Dataproc Cluster

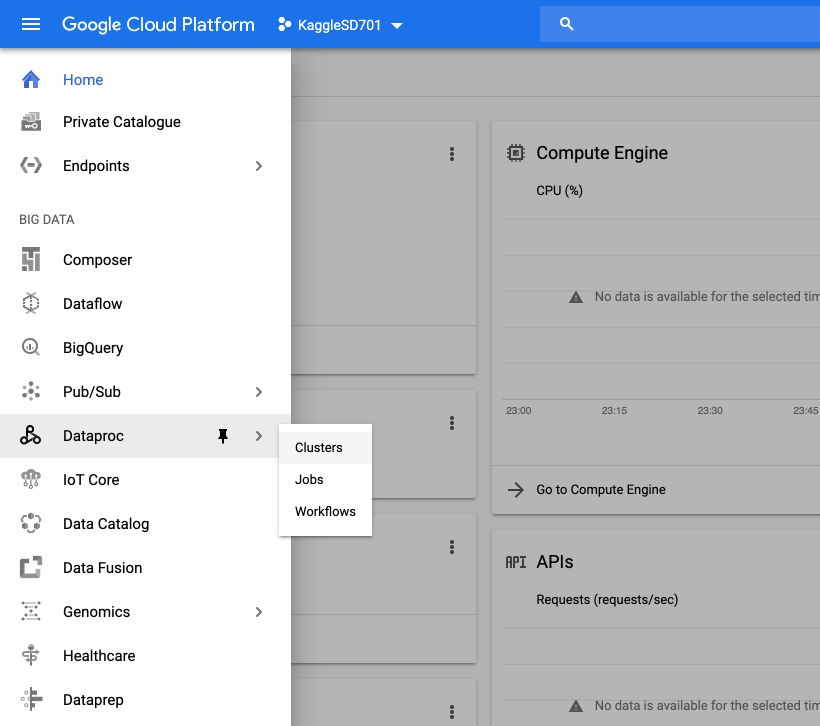

To create a Dataproc cluster, open Dataproc from the GCP Console:

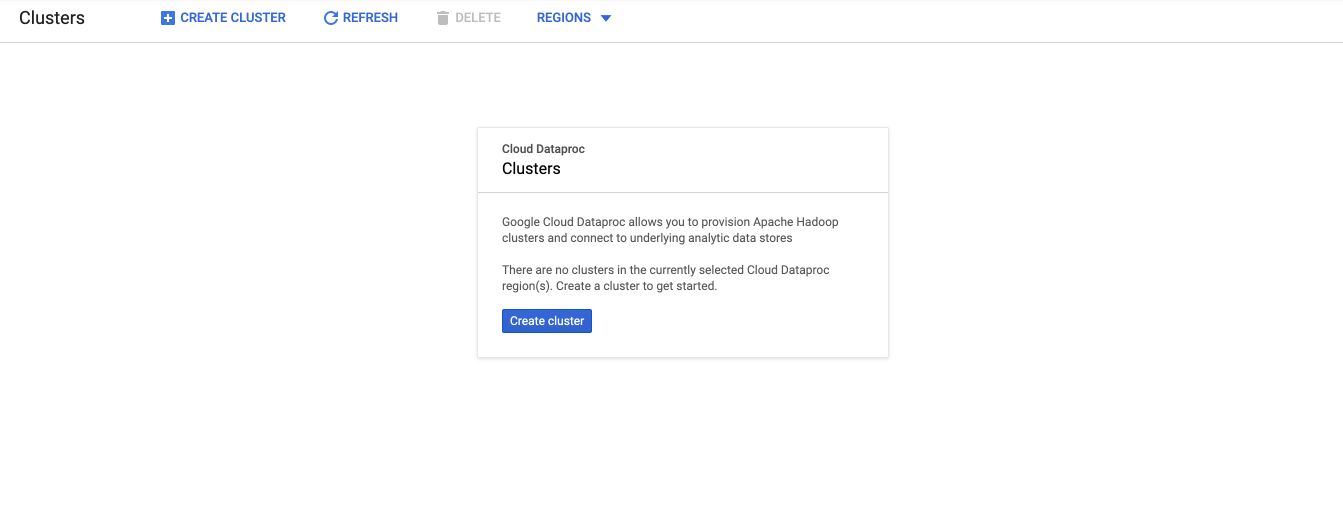

If prompted, click Enable API. Then click Create Cluster:

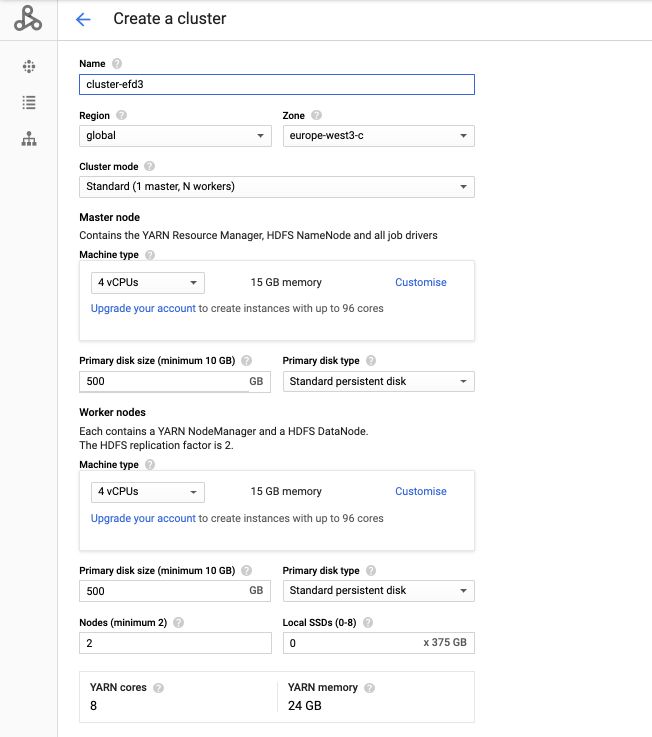

Choose a name for the cluster and a zone. The zone determines where the computations run. As a rule of thumb, keep storage and compute in the same region: it is usually faster and less expensive this way.
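As a quick check of the same-region rule, here is a minimal sketch that derives the region from a zone name and compares it with the region of a bucket. It relies on zone names being the region followed by a letter suffix (for example `us-central1-a`). The function name `region_of_zone` and the two variable values are hypothetical placeholders, not part of any GCP tool.

```shell
# Derive the region from a zone name by dropping the trailing "-<letter>" suffix.
region_of_zone() {
  printf '%s\n' "${1%-*}"
}

# Hypothetical values: the zone chosen for the cluster and the bucket's region.
CLUSTER_ZONE="us-central1-a"
BUCKET_REGION="us-central1"

CLUSTER_REGION="$(region_of_zone "$CLUSTER_ZONE")"
if [ "$CLUSTER_REGION" = "$BUCKET_REGION" ]; then
  echo "OK: cluster and bucket are both in $CLUSTER_REGION"
else
  echo "Warning: cluster is in $CLUSTER_REGION but bucket is in $BUCKET_REGION"
fi
```

With the placeholder values above, the cluster and the bucket are both in `us-central1`, so the first branch is taken.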

Next, for the master, you’ll have to choose between:

- Single Node, for experimentation

- Standard, with 1 master only

- High Availability, with 3 masters (for long-running jobs)
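The three options above can also be expressed on the command line. The sketch below only prints a `gcloud dataproc clusters create` command rather than running it, so it is safe to try anywhere. The helper `dataproc_create_cmd` and the mode names `single`, `standard` and `ha` are hypothetical. The `--single-node` and `--num-masters` flags are the ones I would expect from the gcloud CLI, but check them against `gcloud dataproc clusters create --help` for your installed version.

```shell
# Print (do not run) a gcloud command for the chosen cluster mode.
# Usage: dataproc_create_cmd MODE CLUSTER_NAME REGION ZONE
dataproc_create_cmd() {
  mode="$1"; name="$2"; region="$3"; zone="$4"
  case "$mode" in
    single)   mode_flags="--single-node" ;;     # one node, for experimentation
    standard) mode_flags="--num-masters=1" ;;   # one master plus workers
    ha)       mode_flags="--num-masters=3" ;;   # three masters, for long jobs
    *) echo "unknown mode: $mode" >&2; return 1 ;;
  esac
  echo "gcloud dataproc clusters create $name --region=$region --zone=$zone $mode_flags"
}

# Example with placeholder names: a high-availability cluster.
dataproc_create_cmd ha my-cluster us-central1 us-central1-a
```

Printing the command first makes it easy to review the flags before pasting the line into a shell where `gcloud` is configured.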

HDFS is available for storage, but Cloud Storage is often the easier choice.

The default HDFS replication factor on Dataproc is 2. You can also install custom software on the master and the workers.
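Both settings can be passed when the cluster is created. The sketch below assembles the relevant flags and prints the resulting command without running it. It assumes the `--properties` flag with the `hdfs:` prefix for the replication factor and the `--initialization-actions` flag for a setup script stored in Cloud Storage. The cluster name, region and script path are hypothetical placeholders.

```shell
# Hypothetical values: adjust to your own project.
REPLICATION=2                                      # the Dataproc default, set explicitly
INIT_SCRIPT="gs://my-bucket/install-extras.sh"     # hypothetical script run on each node

# Assumed gcloud flags; verify with: gcloud dataproc clusters create --help
EXTRA_FLAGS="--properties=hdfs:dfs.replication=$REPLICATION --initialization-actions=$INIT_SCRIPT"

# Print the command instead of running it.
echo "gcloud dataproc clusters create my-cluster --region=us-central1 $EXTRA_FLAGS"
```

The initialization script is an ordinary shell script that runs on the nodes as the cluster starts, which is where package installs would go.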

On the training VM, edit a file named myenv, load it with source, and check that the variables are set:

    google4551492_student@training-vm:~$ cd ~
    google4551492_student@training-vm:~$ nano myenv
    google4551492_student@training-vm:~$ source myenv
    google4551492_student@training-vm:~$ echo $PROJECT_ID
    qwiklabs-gcp-a877680de40fd80b
    google4551492_student@training-vm:~$ echo $MYREGION $MYZONE
    us-central1 us-central1-a
    google4551492_student@training-vm:~$ echo $BUCKET
    qwiklabs-gcp-a877680de40fd80b
    google4551492_student@training-vm:~$ echo $BROWSER_IP
    195.132.52.140
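The contents of myenv are not shown in the session above. As an assumption, a file that produces this kind of output could look like the sketch below. Every value is a placeholder, the file is written to a temporary location so it does not overwrite a real myenv, and `.` is used as the portable equivalent of `source`.

```shell
# Write a hypothetical environment file (placeholder values, not the real lab file).
ENV_FILE="${TMPDIR:-/tmp}/myenv.example"
cat > "$ENV_FILE" <<'EOF'
PROJECT_ID="my-project-id"
MYREGION="us-central1"
MYZONE="us-central1-a"
BUCKET="$PROJECT_ID"
BROWSER_IP="203.0.113.10"
EOF

# Load the variables into the current shell, then print them.
. "$ENV_FILE"
echo "$PROJECT_ID"
echo "$MYREGION $MYZONE"
echo "$BUCKET"
echo "$BROWSER_IP"
```

Setting `BUCKET` from `PROJECT_ID` mirrors the session, where both variables print the same value.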